Optical character recognition using deep recurrent attention model

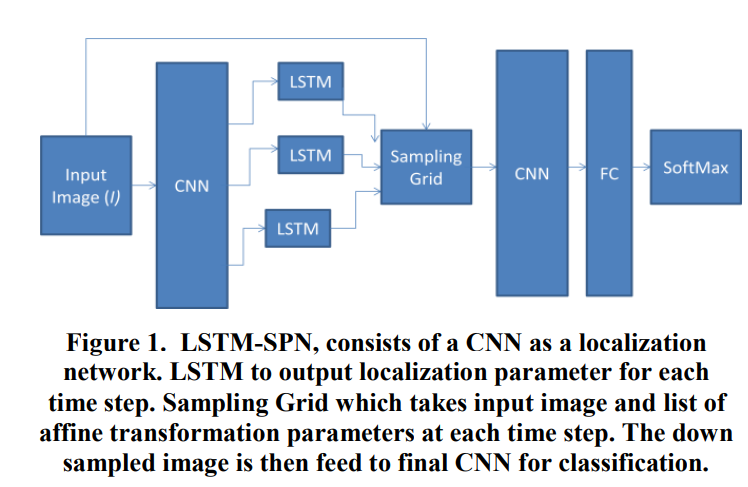

We address the problem of recognizing multi-digit numbers in optical character images. Classical approaches to this problem involve separate localization, segmentation, and recognition steps. In this paper, we propose an integrated approach to multi-digit recognition, from raw pixels to final multi-class labeling. It uses a recurrent attention model, based on a spatial transformer model equipped with an LSTM, to localize digits individually, followed by a deep convolutional neural network for the actual recognition. The proposed method is evaluated on the publicly available SVHN dataset, where it achieves improved recognition accuracy both for individual digits and for complete street numbers. Further evaluation on the cluttered MNIST dataset shows results comparable to state-of-the-art techniques, but with a simple and computationally efficient network architecture. © 2017 Association for Computing Machinery.
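To make the localization idea concrete, the following is a minimal sketch of the sampling step at the core of a spatial transformer: a 2×3 affine matrix selects a region of the input, which is resampled by bilinear interpolation into a fixed-size glimpse. It is not the implementation from this paper. In the described model the transform would be predicted at each step by the LSTM-equipped localization network, whereas here `theta` is chosen by hand. The function names `bilinear_sample` and `affine_glimpse` and the normalized coordinate convention (both axes mapped to [-1, 1]) are assumptions made for illustration.

```python
import math
from typing import List, Sequence

Image = List[List[float]]


def bilinear_sample(image: Image, x: float, y: float) -> float:
    """Bilinearly interpolate image at pixel position (x, y).

    Neighbours that fall outside the image contribute zero.
    """
    in_h, in_w = len(image), len(image[0])
    x0, y0 = math.floor(x), math.floor(y)
    value = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < in_h and 0 <= xx < in_w:
                weight = (1.0 - abs(x - xx)) * (1.0 - abs(y - yy))
                value += weight * image[yy][xx]
    return value


def affine_glimpse(
    image: Image, theta: Sequence[Sequence[float]], out_h: int, out_w: int
) -> Image:
    """Extract an out_h x out_w glimpse using a 2x3 affine matrix theta.

    Output and input coordinates are normalized to [-1, 1] (assumed
    convention); theta maps each output location to a source location.
    """
    in_h, in_w = len(image), len(image[0])
    glimpse = []
    for i in range(out_h):
        yt = 2.0 * i / (out_h - 1) - 1.0 if out_h > 1 else 0.0
        row = []
        for j in range(out_w):
            xt = 2.0 * j / (out_w - 1) - 1.0 if out_w > 1 else 0.0
            # Affine map from output grid to normalized source coordinates.
            xs = theta[0][0] * xt + theta[0][1] * yt + theta[0][2]
            ys = theta[1][0] * xt + theta[1][1] * yt + theta[1][2]
            # Convert normalized coordinates to pixel coordinates.
            px = (xs + 1.0) * (in_w - 1) / 2.0
            py = (ys + 1.0) * (in_h - 1) / 2.0
            row.append(bilinear_sample(image, px, py))
        glimpse.append(row)
    return glimpse


# Toy 5x5 "image" whose pixel value encodes its position.
image = [[float(r * 5 + c) for c in range(5)] for r in range(5)]

identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
# Scale by 0.5 and shift by -0.5: attends to the top-left 3x3 region.
top_left = [[0.5, 0.0, -0.5], [0.0, 0.5, -0.5]]

full_view = affine_glimpse(image, identity, 5, 5)
crop = affine_glimpse(image, top_left, 3, 3)
```

With the identity transform the glimpse reproduces the input, while the scaled and shifted transform crops the top-left 3×3 region. In a recurrent attention setting, a sequence of such transforms (one per digit) would feed successive glimpses to the recognition network.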