Body and visual sensor fusion for motion analysis in ubiquitous healthcare systems

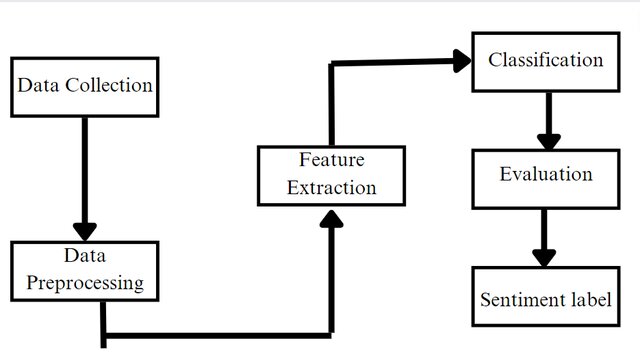

Human motion analysis provides a valuable solution for monitoring the wellbeing of the elderly, quantifying post-operative patient recovery, and monitoring the progression of neurodegenerative diseases such as Parkinson's disease. The development of accurate motion analysis models, however, requires the integration of multiple sensing modalities and the use of appropriate data analysis techniques. This paper describes a robust framework for improved patient motion analysis that integrates information captured by body and visual sensor networks. Real-time target extraction is applied, and a skeletonization procedure is then carried out to quantify the internal motion of the moving target and to compute two metrics for each vision node: the spatiotemporal cyclic motion between leg segments and the head trajectory. The motion metrics extracted from multiple vision nodes and the accelerometer information from a wearable body sensor are then fused at the feature level using a K-Nearest Neighbor algorithm and used to classify the target's walking gait as normal or abnormal. The potential value of the proposed framework for patient monitoring is demonstrated, and the results obtained from practical experiments are described. © 2010 IEEE.
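The feature-level fusion step can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes that fusion means concatenating the per-node vision metrics with the accelerometer features into a single vector, and that classification is a majority vote over the k nearest training vectors under Euclidean distance. The function names `fuse_features` and `knn_classify`, the choice of k = 3, and all numeric values are hypothetical and chosen only for illustration.

```python
import math
from collections import Counter


def fuse_features(vision_features, accel_features):
    """Concatenate the metrics of every vision node with the accelerometer
    features into one fused feature vector (assumed fusion scheme)."""
    fused = []
    for node_metrics in vision_features:
        fused.extend(node_metrics)
    fused.extend(accel_features)
    return fused


def knn_classify(train, query, k=3):
    """Label `query` by majority vote among its k nearest training vectors.

    `train` is a list of (feature_vector, label) pairs; distance is Euclidean.
    """
    neighbors = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Hypothetical training data: two vision nodes, each giving
# [leg cyclic-motion metric, head-trajectory metric], plus one accelerometer feature.
train = [
    (fuse_features([[0.9, 0.1], [0.8, 0.1]], [0.2]), "normal"),
    (fuse_features([[1.0, 0.2], [0.9, 0.2]], [0.3]), "normal"),
    (fuse_features([[0.4, 0.6], [0.3, 0.7]], [0.9]), "abnormal"),
    (fuse_features([[0.3, 0.7], [0.4, 0.6]], [0.8]), "abnormal"),
]

query = fuse_features([[0.85, 0.15], [0.8, 0.2]], [0.25])
label = knn_classify(train, query, k=3)
```

In this sketch the features are used unscaled; in practice, features from different sensors would likely need normalization before distances are compared, since the modalities have different units and ranges.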