Multimodal Video Sentiment Analysis Using Deep Learning Approaches, a Survey

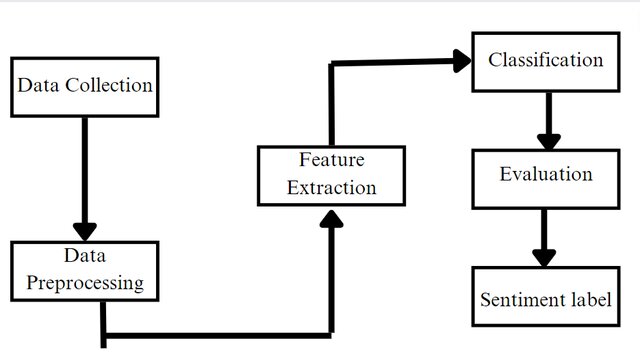

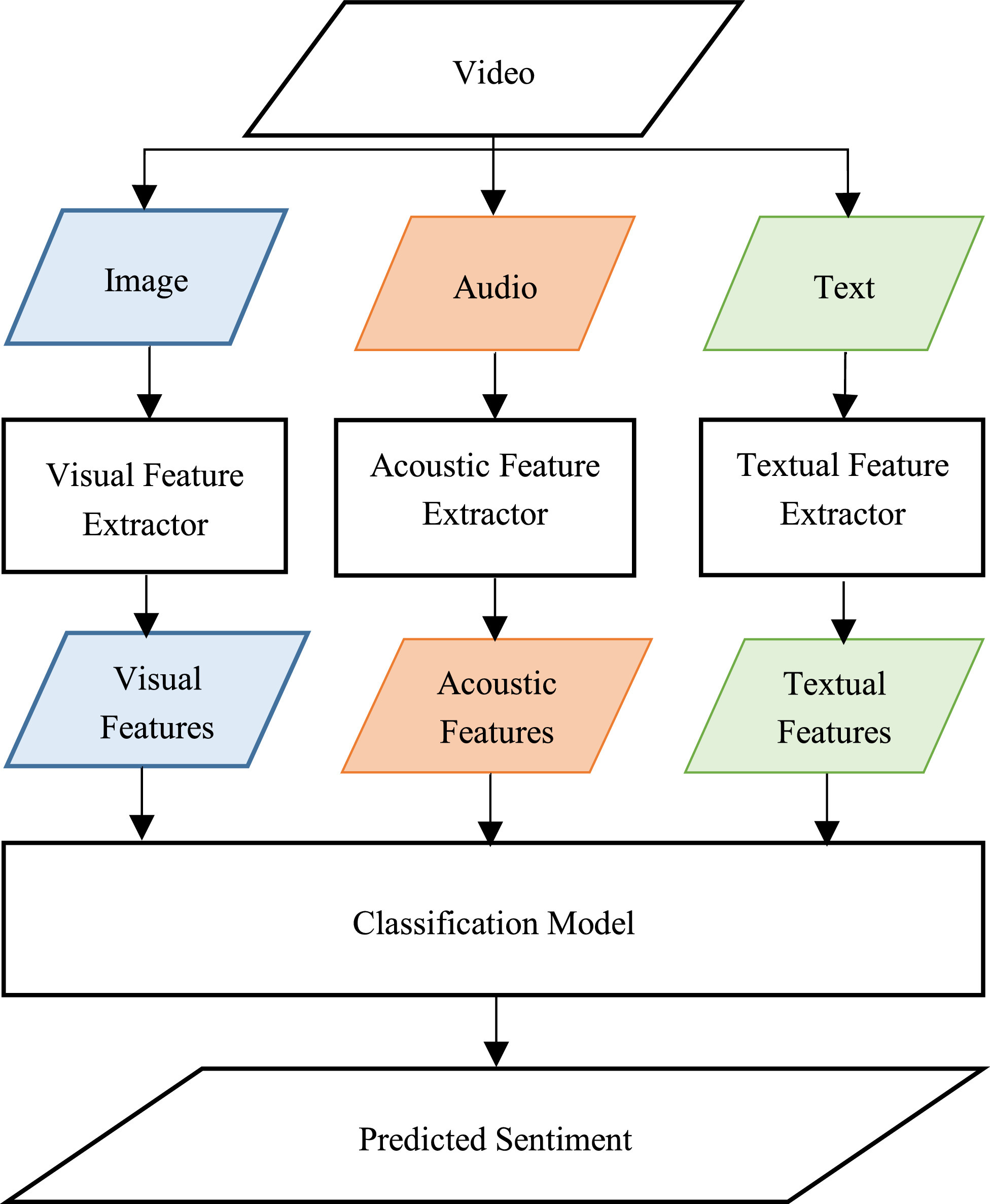

Deep learning has emerged as a powerful machine learning technique for multimodal sentiment analysis tasks. In recent years, many deep learning models and algorithms have been proposed for multimodal sentiment analysis, which creates a need for surveys that summarize recent research trends and directions. This survey provides a comprehensive overview of the latest developments in the field. We categorize thirty-five state-of-the-art models recently proposed for video sentiment analysis into eight categories based on the architecture each model uses. The effectiveness and efficiency of these models are evaluated on the two most widely used datasets in the field, CMU-MOSI and CMU-MOSEI. After an intensive analysis of the results, we conclude that the most powerful architecture for multimodal sentiment analysis is the Multi-Modal Multi-Utterance based architecture, which exploits both the information from all modalities and the contextual information from neighbouring utterances in a video in order to classify the target utterance. This architecture mainly consists of two modules whose order may vary from one model to another. The first is the Context Extraction Module, which models the contextual relationships among neighbouring utterances in the video and highlights which of the relevant contextual utterances are most important for predicting the sentiment of the target utterance. In the most recent models, this module is usually based on a bidirectional recurrent neural network. The second is an Attention-Based Module, which fuses the three modalities (text, audio and video) and gives priority to the most important ones.
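As a rough illustration of the two-module Multi-Modal Multi-Utterance design described above, the following Python sketch shows how data might flow through a context extraction step followed by an attention-based fusion step. Everything in it is an assumption made for illustration, not a model from the surveyed literature: the names `BiRNNContext` and `attention_fuse` are hypothetical, a plain Elman RNN cell stands in for the GRU or LSTM cells such models typically use, the per-modality feature sizes are toy values, and all weights are random and untrained. It runs context extraction first and fusion second, although, as noted, the order varies across models.

```python
# Hypothetical, untrained sketch of a Multi-Modal Multi-Utterance pipeline:
# (1) a bidirectional RNN adds neighbouring-utterance context per modality,
# (2) attention weights the three modalities and fuses them per utterance.
import math
import random
from typing import List

Vector = List[float]


def matvec(m: List[Vector], v: Vector) -> Vector:
    # Dense matrix-vector product; m has shape (rows, len(v)).
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def rand_matrix(rows: int, cols: int, rng: random.Random) -> List[Vector]:
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class BiRNNContext:
    """Hypothetical context extraction module: a bidirectional Elman RNN
    run over the sequence of utterance feature vectors of one video."""

    def __init__(self, in_dim: int, hid_dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.hid_dim = hid_dim
        # Input (w) and recurrent (u) weights for the forward and backward passes.
        self.w_f, self.u_f = rand_matrix(hid_dim, in_dim, rng), rand_matrix(hid_dim, hid_dim, rng)
        self.w_b, self.u_b = rand_matrix(hid_dim, in_dim, rng), rand_matrix(hid_dim, hid_dim, rng)

    def _run(self, xs: List[Vector], w, u) -> List[Vector]:
        h = [0.0] * self.hid_dim
        out = []
        for x in xs:
            # h_t = tanh(W x_t + U h_{t-1})
            h = [math.tanh(a + b) for a, b in zip(matvec(w, x), matvec(u, h))]
            out.append(h)
        return out

    def __call__(self, utterances: List[Vector]) -> List[Vector]:
        fwd = self._run(utterances, self.w_f, self.u_f)
        bwd = self._run(utterances[::-1], self.w_b, self.u_b)[::-1]
        # Each utterance becomes [past context ; future context].
        return [f + b for f, b in zip(fwd, bwd)]


def softmax(scores: Vector) -> Vector:
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(modal_vecs: List[Vector], query: Vector):
    """Score each modality vector against a (hypothetical) learned query,
    normalise the scores with softmax, and return the weighted sum."""
    scores = [sum(q * x for q, x in zip(query, v)) for v in modal_vecs]
    weights = softmax(scores)
    dim = len(modal_vecs[0])
    fused = [sum(w * v[i] for w, v in zip(weights, modal_vecs)) for i in range(dim)]
    return fused, weights


# Toy video: 4 utterances; each modality has its own (made-up) feature size.
rng = random.Random(42)
n_utt, hid = 4, 4
dims = {"text": 6, "audio": 5, "visual": 4}
video = {m: [[rng.gauss(0, 1) for _ in range(d)] for _ in range(n_utt)]
         for m, d in dims.items()}

# Module 1: per-modality context extraction maps every modality to 2 * hid dims.
context = {m: BiRNNContext(dims[m], hid, seed=i)(video[m]) for i, m in enumerate(dims)}

# Module 2: attention-based fusion of the three modalities for each utterance.
query = [rng.uniform(-1, 1) for _ in range(2 * hid)]
fused_per_utt, weights_per_utt = [], []
for t in range(n_utt):
    fused, weights = attention_fuse([context[m][t] for m in dims], query)
    fused_per_utt.append(fused)
    weights_per_utt.append(weights)
```

In a trained model the fused vector for each utterance would feed a sentiment classifier, and the attention weights indicate how much each modality contributed; here they only demonstrate the mechanics, since nothing is learned.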
Furthermore, this paper briefly summarizes the most popular approaches used to extract features from multimodal videos and provides a comparative analysis of the most popular benchmark datasets in the field. We expect these findings to give newcomers a panoramic view of the entire field and to help them gain experience quickly from the insights provided, guiding them toward the development of more effective models. © 2021 Elsevier B.V.